Big data technologies are changing how industries operate by letting organizations use large volumes of data for strategic advantage. In sectors such as finance, healthcare, and retail, they are used to analyze patterns, support decision-making, and improve customer experiences. Over the past decade, these technologies have evolved significantly to keep pace with growing demands for data processing and analysis.

This evolution has produced a wide range of tools and frameworks for data storage, processing, and analytics, giving businesses powerful resources to excel in their fields.

Introduction to Big Data Technologies

Big data technologies enable organizations to process and analyze large volumes of data quickly. Their value lies in turning raw data into insights that support informed decisions. Industries such as finance, healthcare, retail, and telecommunications use them to increase operational efficiency, improve customer experiences, and gain competitive advantages. Over the past decade, the field has moved from simple data storage solutions to analytics tools that incorporate artificial intelligence and machine learning.

Key Components of Big Data Technologies

The core components of big data technologies encompass data storage, processing, and analytics. These components are essential for managing and deriving insights from large datasets. Below is a detailed table listing popular tools used in each component, along with their main features:

| Component | Tool | Main Features |
|---|---|---|
| Data Storage | Hadoop HDFS | Distributed file system, fault tolerance, scalability |
| Data Storage | Amazon S3 | Scalable storage, data security, easy access |
| Data Processing | Apache Spark | Batch and near-real-time processing, in-memory computing, ease of use |
| Data Processing | Apache Flink | Stream processing, event time processing, stateful computations |
| Data Analytics | Tableau | Data visualization, user-friendly interface, real-time dashboards |
| Data Analytics | Power BI | Interactive reports, integration with Microsoft products, data modeling |

Databases play a pivotal role in big data technologies, and the choice between SQL and NoSQL systems is a central design decision. NoSQL databases, such as MongoDB and Cassandra, are designed for semi-structured or unstructured data with flexible schemas, and they scale horizontally across many nodes. In contrast, SQL databases like MySQL and PostgreSQL manage structured data under a fixed schema and support complex queries such as joins and aggregations. Each type has its advantages, depending on the specific requirements of the big data application.
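To make the contrast concrete, here is a minimal sketch that uses only Python's standard library. An in-memory SQLite table stands in for a relational database, and a plain list of dictionaries stands in for a document store such as MongoDB. The `orders` table, its columns, and the sample records are hypothetical and chosen only for illustration.

```python
import sqlite3

# Hypothetical sample records; the second one carries an extra field.
records = [
    {"customer": "alice", "amount": 30.0},
    {"customer": "bob", "amount": 12.5, "coupon": "SPRING"},
    {"customer": "alice", "amount": 7.5},
]


def total_per_customer_sql(rows):
    """Relational style: fixed schema, declarative aggregation in SQL."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT NOT NULL, amount REAL NOT NULL)")
    # Fields outside the schema (such as 'coupon') have no column to go into.
    conn.executemany(
        "INSERT INTO orders (customer, amount) VALUES (?, ?)",
        [(r["customer"], r["amount"]) for r in rows],
    )
    cursor = conn.execute(
        "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
    )
    result = dict(cursor.fetchall())
    conn.close()
    return result


def total_per_customer_documents(docs):
    """Document style: records keep whatever fields they have; the application aggregates."""
    totals = {}
    for doc in docs:
        totals[doc["customer"]] = totals.get(doc["customer"], 0.0) + doc["amount"]
    return totals


if __name__ == "__main__":
    print(total_per_customer_sql(records))
    print(total_per_customer_documents(records))
```

Both functions return the same totals. The difference is where the structure lives: in the database schema for the SQL version, and in application code for the document version.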

Data Collection Methods

Effective data collection is the foundation of any big data project. Common sources include sensors, web scraping, and APIs. Best practices include collecting only relevant data, keeping formats consistent across sources, and adhering to ethical guidelines. Data quality and integrity matter most at this stage, because errors introduced during collection carry through to every later analysis.

- Define clear objectives for data collection to stay focused.

- Utilize automated tools for web scraping to enhance efficiency.

- Ensure compliance with data privacy regulations.

- Regularly validate and clean collected data to maintain quality.
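The last practice, validating and cleaning collected data, can be sketched in a few lines of Python. The record layout (an `id` and a numeric `value`) and the three rules applied here are assumptions for illustration; a real project would derive its rules from its own data.

```python
def clean_records(raw_records, required_fields=("id", "value")):
    """Drop incomplete, non-numeric, and duplicate records; normalise types."""
    seen_ids = set()
    cleaned = []
    for record in raw_records:
        # Completeness check: every required field must be present and non-empty.
        if any(record.get(field) in (None, "") for field in required_fields):
            continue
        # Type check: 'value' must be convertible to a number.
        try:
            value = float(record["value"])
        except (TypeError, ValueError):
            continue
        # De-duplication: keep only the first record seen for each id.
        if record["id"] in seen_ids:
            continue
        seen_ids.add(record["id"])
        cleaned.append({"id": record["id"], "value": value})
    return cleaned


if __name__ == "__main__":
    raw = [
        {"id": 1, "value": "3.5"},
        {"id": 1, "value": "3.5"},   # duplicate id
        {"id": 2, "value": ""},      # missing value
        {"id": 3, "value": "n/a"},   # not a number
        {"id": 4, "value": 10},
    ]
    print(clean_records(raw))
```

Of the five sample records, only the first and the last survive the checks.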

Big Data Processing Frameworks

Big data workloads are processed in one of two modes: batch or real-time. Batch processing handles large volumes of accumulated data in a single run, making it ideal for applications such as data warehousing and reporting. In contrast, real-time (stream) processing handles data as it is generated, which is crucial for applications requiring immediate insights, such as fraud detection.

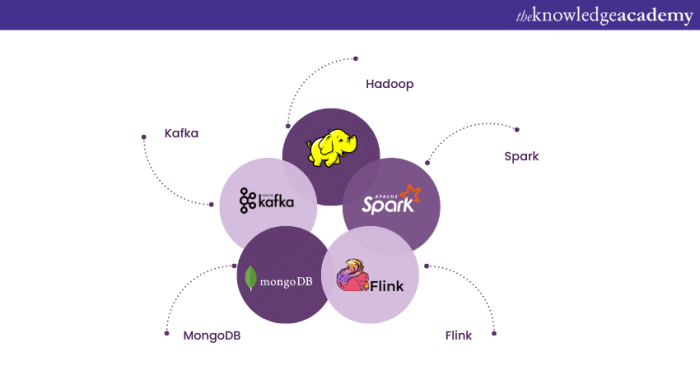
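The difference between the two modes can be illustrated in plain Python, without any framework. In this sketch the batch function needs the complete dataset before it can answer, while the streaming function reacts to each event as it arrives and keeps only a small running state. The rule used to flag a transaction (an amount more than three times the running average) is a hypothetical stand-in, not a real fraud detection method.

```python
def batch_average(transactions):
    """Batch style: the full dataset is available before processing starts."""
    return sum(transactions) / len(transactions)


def streaming_alerts(transaction_stream, threshold_ratio=3.0):
    """Streaming style: handle each event on arrival, keeping only running state.

    Yields any amount exceeding threshold_ratio times the running average
    of the amounts seen before it.
    """
    count = 0
    total = 0.0
    for amount in transaction_stream:
        if count > 0 and amount > threshold_ratio * (total / count):
            yield amount
        count += 1
        total += amount


if __name__ == "__main__":
    amounts = [20.0, 25.0, 22.0, 400.0, 21.0]
    print(batch_average(amounts))           # 97.6
    print(list(streaming_alerts(amounts)))  # [400.0]
```

The streaming version flags the unusual amount the moment it is seen, whereas the batch version can only report on it after the whole run completes.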

Popular big data processing frameworks include Apache Hadoop and Apache Spark. Each has an architecture suited to different processing needs. The table below compares the advantages and disadvantages of each framework.

| Framework | Advantages | Disadvantages |
|---|---|---|
| Apache Hadoop | Scalable, cost-effective, fault-tolerant | Slower processing speed, complex setup |
| Apache Spark | Fast processing, in-memory computing, versatile | Higher resource consumption, complexity in management |
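Hadoop's classic processing model is MapReduce, and a single-machine imitation of it helps explain the trade-offs in the table. The sketch below is plain Python and does not use the Hadoop or Spark APIs; the function names are hypothetical. It only mirrors the three phases that a MapReduce job runs across a cluster.

```python
from collections import defaultdict


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into one result."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(lines):
    """Run the three phases in order on an in-memory list of lines."""
    return reduce_phase(shuffle_phase(map_phase(lines)))


if __name__ == "__main__":
    print(word_count(["big data", "big insights"]))
```

In Hadoop MapReduce, intermediate results between phases are written to disk, which favors fault tolerance over speed. Spark keeps intermediate data in memory where it can, which is broadly why it is faster but uses more resources.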

Data Analysis Techniques

Data analysis techniques extract insights from big data, and machine learning and predictive analytics are among the most common. Algorithms such as linear regression, decision trees, and clustering methods are frequently used to analyze large datasets and predict future trends. Visualization tools like Tableau and Power BI help interpret the results by transforming complex data into comprehensible visual formats.

- Linear Regression: Predicts a continuous outcome by fitting a linear relationship between input features and the target.

- Decision Trees: Classify or predict by splitting the data on feature values, which lets them capture non-linear relationships.

- K-Means Clustering: Groups data points into k clusters by assigning each point to its nearest cluster centroid.
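Two of these algorithms are small enough to sketch in plain Python. The code below fits a line with ordinary least squares for a single feature and runs k-means on one-dimensional data from caller-supplied starting centroids. Both are simplified teaching versions under those assumptions; production work would normally rely on a machine learning library, and decision trees are left out here because they need considerably more code.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def k_means_1d(points, centroids, iterations=10):
    """K-means on one-dimensional data, starting from the given centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(point - centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids


if __name__ == "__main__":
    # Points lying exactly on y = 2x + 1.
    print(fit_line([1, 2, 3, 4], [3, 5, 7, 9]))                        # (2.0, 1.0)
    # Two well-separated groups around 2 and 11.
    print(k_means_1d([1.0, 2.0, 3.0, 10.0, 11.0, 12.0], [0.0, 5.0]))   # [2.0, 11.0]
```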

Challenges in Big Data Technologies

Implementing big data technologies brings several challenges. Data privacy and security are key concerns, since organizations must protect sensitive information against breaches. There is also a notable skills gap in the workforce: professionals proficient in advanced analytics and data management tools are in short supply.

To overcome these challenges, organizations can adopt several strategies:

- Invest in employee training programs to build necessary skills.

- Implement robust data governance frameworks to ensure compliance.

- Utilize automated tools to enhance data security measures.
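One example of an automated safeguard is pseudonymizing sensitive fields before data reaches analysts. The sketch below uses Python's standard `hmac` and `hashlib` modules to replace identifiers with keyed digests. The field names and the key are hypothetical, and this is only one possible measure: pseudonymized data is still personal data under many privacy regulations, and the key itself has to be stored securely.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed HMAC-SHA256 digest.

    The same input and key always give the same token, so records can still
    be joined, but the original value is not readable from the token.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), secret_key) if field in sensitive_fields else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    key = b"replace-with-a-securely-stored-key"  # hypothetical key for the demo
    record = {"email": "user@example.com", "amount": 42.0}
    print(mask_record(record, {"email"}, key))
```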

Future Trends in Big Data Technologies

Emerging trends in big data technologies include the integration of artificial intelligence and the rise of edge computing. AI enhances data analytics capabilities, allowing for more accurate predictions and automated decision-making. Edge computing, on the other hand, facilitates data processing closer to the source, reducing latency and bandwidth usage.
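The bandwidth saving from edge computing can be illustrated with a small sketch. Instead of sending every raw sensor reading to a central system, an edge device summarizes each window of readings locally and transmits only the summary. The window size and the summary fields below are assumptions for illustration.

```python
def summarize_at_edge(readings):
    """Reduce a window of raw sensor readings to one compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": sum(readings) / len(readings),
    }


def edge_pipeline(readings, window_size):
    """Split readings into fixed-size windows and summarize each one locally."""
    return [
        summarize_at_edge(readings[start:start + window_size])
        for start in range(0, len(readings), window_size)
    ]


if __name__ == "__main__":
    raw = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    # Six raw readings become two summaries to transmit.
    print(edge_pipeline(raw, window_size=3))
```

The trade-off is that detail is lost: the central system sees the summaries but can no longer inspect individual readings.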

These trends have significant implications for businesses. Organizations can expect improved operational efficiency, enhanced customer experiences, and more data-driven decision-making. Over the next five years, reliance on real-time data analytics is expected to grow, along with the use of AI in data processing and analysis.

Concluding Thoughts

Big data technologies already shape how many industries operate, and the challenges and trends described above will shape how they evolve. As organizations continue to adapt to a fast-paced digital world, understanding and applying these technologies will be essential for maintaining a competitive edge. Innovations such as AI integration and edge computing are set to change how data is collected, processed, and used.